3.7: Networking for WordPress Administrators

Being an effective WordPress website administrator and running secure websites requires a knowledge of networking fundamentals. You need to understand what an IP address is, the difference between IP version 4 and version 6, who owns an IP address or address range, and so on.

Rather than try to give you a few snippets of incomplete information, this article attempts to give you a complete intermediate level understanding of networking. This will empower you not just in your website administration, but it is knowledge that you will use repeatedly as long as your work exposes you to the Internet at any technical level. You will probably find yourself noticing gaps in the knowledge of your co-workers once you’re finished here and will hopefully be able to help fill them.

In this article we’ll often refer to the concept of a network ‘protocol’. A protocol is an agreed method that two machines, operating systems or applications choose to use to talk to each other. It’s like one person agreeing with another that one flash from a flashlight means “everything is OK” and three flashes means “go and get help”. A protocol is the same thing but includes things like how a message should be packaged up and special things that happen at the start and end of a conversation.

We will also refer to the term ‘packets’. A packet is a chunk of binary data that is passed across a network. When data is sent across a network it is not sent in a continuous stream. Instead it is broken into chunks or ‘packets’ and these packets are given addressing information, kind of like an envelope that contains an address. Routers on the network then pass those packets across the network, routing them to their destination address.

But we’re getting ahead of ourselves. Let’s start right at the beginning with the OSI model and how a network is structured from the ground up.

The OSI Model

Remember when one of your lecturers or teachers at school would tell you something is only theoretical, and your knowledge deflector shields would go up? Well I’m going to teach you something theoretical. The difference is that network administrators and engineers refer to the OSI model almost daily in their work. It’s an excellent metaphor that helps describe and categorize every part of a network.

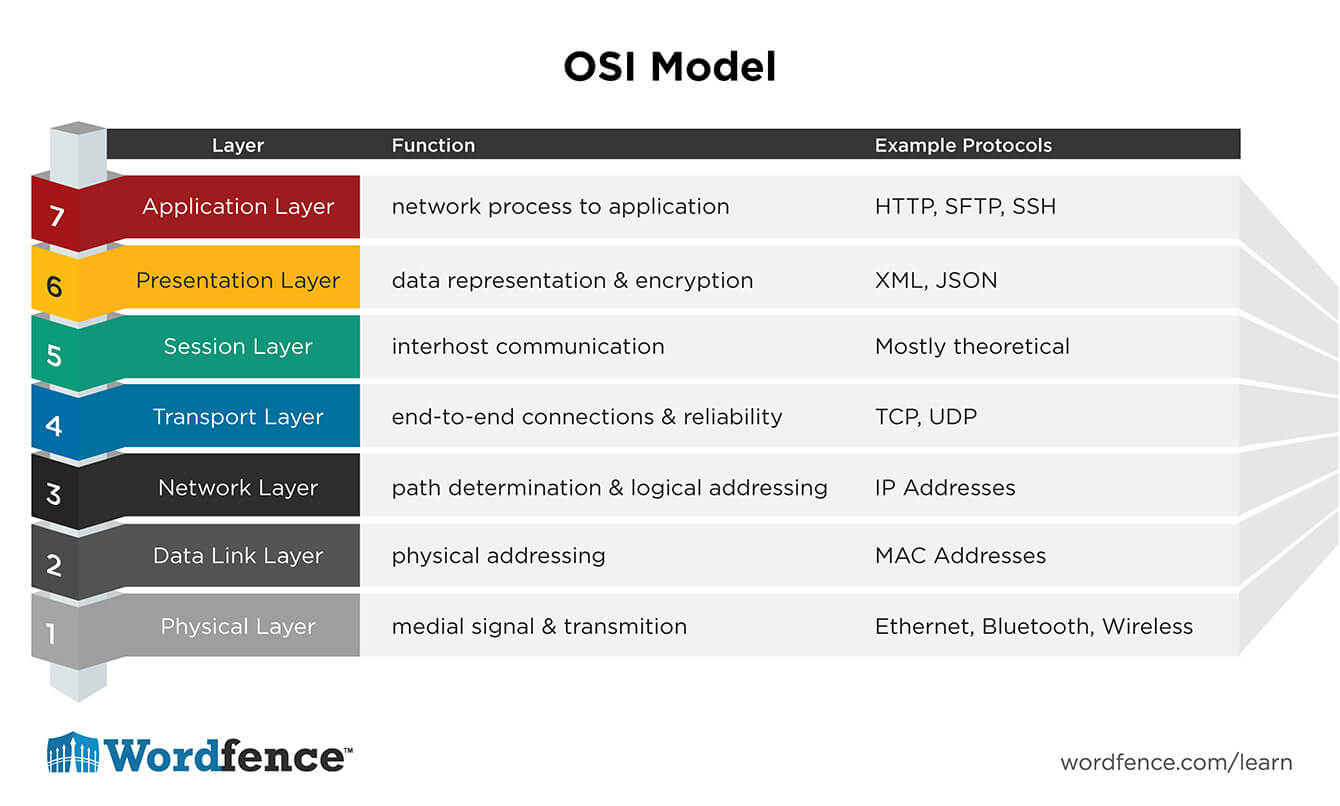

The OSI model is a layered model that represents the various parts of a network starting at the physical layer (Layer 1, network cabling) all the way up to the application layer – layer 7.

The layers of the OSI model are: Physical, Data-Link, Network, Transport, Session, Presentation, Application

These layers are numbered, so the Physical layer is layer 1, Data-link is layer 2 and so on up to Application which is layer 7.

Being able to remember the layers in the OSI model is extremely helpful. A trick we use is the following mnemonic: Please, Do, Not, Take, Sales, Person’s, Advice. Some of us have been using this mnemonic for more than 20 years to remember that, for example, Layer 3 is the Network layer (Not) or Layer 7 is the application layer (Advice).

Here is a brief summary of what each layer in the OSI model does:

The Physical Layer (layer 1)

This is your physical network cabling, wireless radio signals, the electrical signaling waveforms that occur on your network cable and the modulation and demodulation of radio signals in wireless networking. The physical layer is as close to the wire as you can get.

The Data-link Layer (layer 2)

Layer 2 and the concept of “layer 2 networking” is important and along with layer 3 it is the most frequently referred to layer of the OSI model in networking conversations. Usually you won’t hear layer 2 referred to as “the data-link layer” in conversation. It will simply be referred to as ‘layer 2’.

The data link layer describes how a reliable connection occurs between two physical nodes that are directly connected. For example, a network switch may be connected to an ethernet card in a machine. The protocols on the wire that provide error correction and establish half or full-duplex transmission are layer 2 data-link layer protocols.

The Media Access Control or MAC protocol occurs at layer 2. Every network card is given a MAC address that is intended to be unique in the world. A MAC address is an address that identifies a physical machine. This differs from the logical addresses you’ll find in layer 3.

An example of a MAC address is:

68:5b:35:8e:4d:21

The address uses 12 hexadecimal digits to identify a machine. Each hexadecimal digit ranges from 0 to 9 and then A to F.

The first half of the MAC address (68:5b:35 in the example above) is a vendor code and the second half is a unique ID. You can enter a MAC address into a vendor lookup database and be told which vendor produced the machine the address belongs to. These addresses are used to uniquely identify machines on a local area network and they help a switch send traffic to the correct machine on a local network. All of this happens at layer 2.
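As a minimal sketch of that split, the Python snippet below separates a MAC address into its vendor prefix and its device ID. The function name `split_mac` is a hypothetical helper we made up for illustration. It does not perform the vendor lookup itself.

```python
def split_mac(mac: str) -> tuple[str, str]:
    """Split a colon-separated MAC address into (vendor prefix, device ID)."""
    parts = mac.lower().split(":")
    if len(parts) != 6 or any(len(p) != 2 for p in parts):
        raise ValueError(f"not a colon-separated MAC address: {mac!r}")
    for p in parts:
        int(p, 16)  # raises ValueError if a pair is not hexadecimal
    # First three pairs are the vendor code, last three are the unique ID
    return ":".join(parts[:3]), ":".join(parts[3:])


vendor, device = split_mac("68:5b:35:8e:4d:21")
print(vendor)  # 68:5b:35
print(device)  # 8e:4d:21
```

The vendor prefix is the part you would paste into a vendor lookup database.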

When you hear someone refer to “layer 2 networking” or a “layer 2 switch”, what they’re referring to is packets routed on a local network using machine MAC addresses. Usually layer 2 networking is handled by a switch which maintains a table of MAC addresses and routes packets between machines on your local network.

Devices on layer 2 are Switches and Bridges. Both of these connect local area network segments and they maintain tables of MAC addresses to route packets around on the local network. If you’d like to learn more about layer 2 networking, read up about switches (bridges are seldom used these days because switches are now more affordable than they used to be).

The Network Layer (layer 3)

Layer 3, or the Network layer, is alongside layer 2 the most important and most frequently mentioned layer of the OSI model. You will usually hear it referred to as “layer 3” or “the network layer” interchangeably.

Layer 3 describes routers, IP addresses and the mechanisms that are used to route packets between local area networks and across wide area networks. Routing packets between networks and across the Internet requires the use of logical addresses. These are called IP addresses. Routers use routing tables to determine how to get a packet from a machine on one side of the world to the other. All this happens at layer 3.

When you hear the phrase “layer 3 networking”, a network engineer is referring to the routing of packets between machines using their IP addresses.

The Transport Layer (layer 4)

You may be able to use Layer 3 to send packets across the Internet, but you can’t use it to maintain connections. That’s where layer 4 comes in. Layer 4 or The Transport Layer is what maintains connections between machines. The protocol responsible for this, and that operates at layer 4 on the Internet is called TCP.

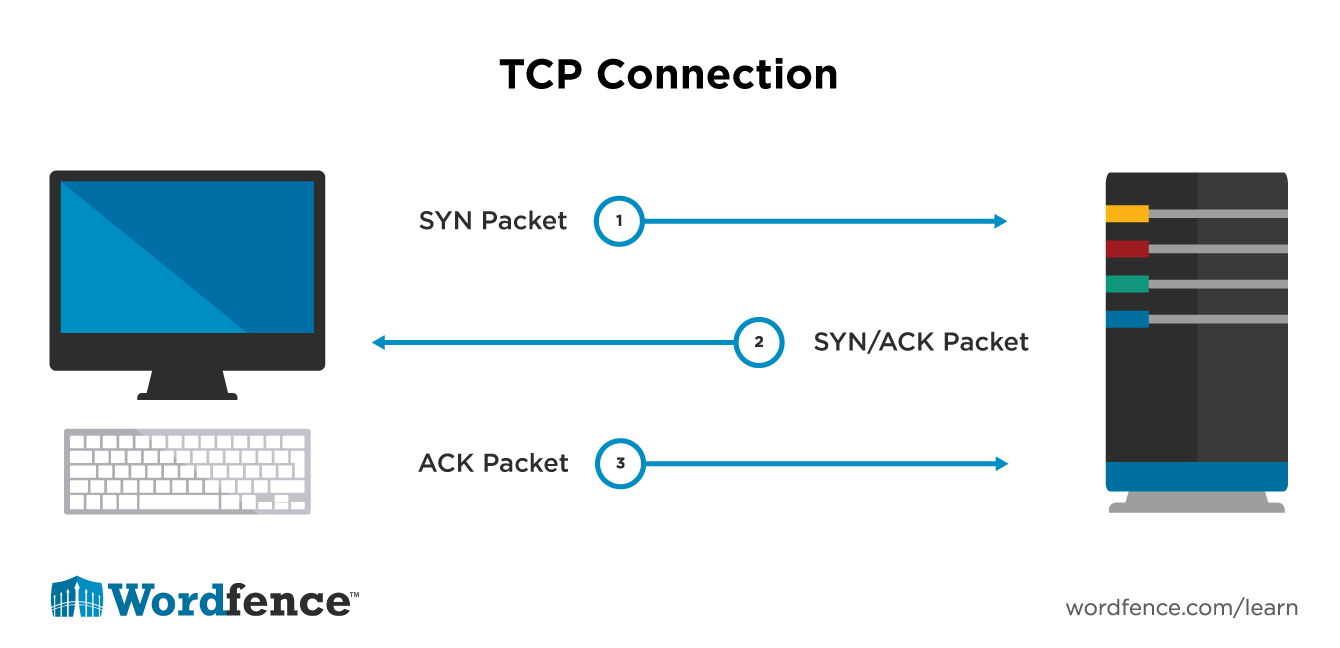

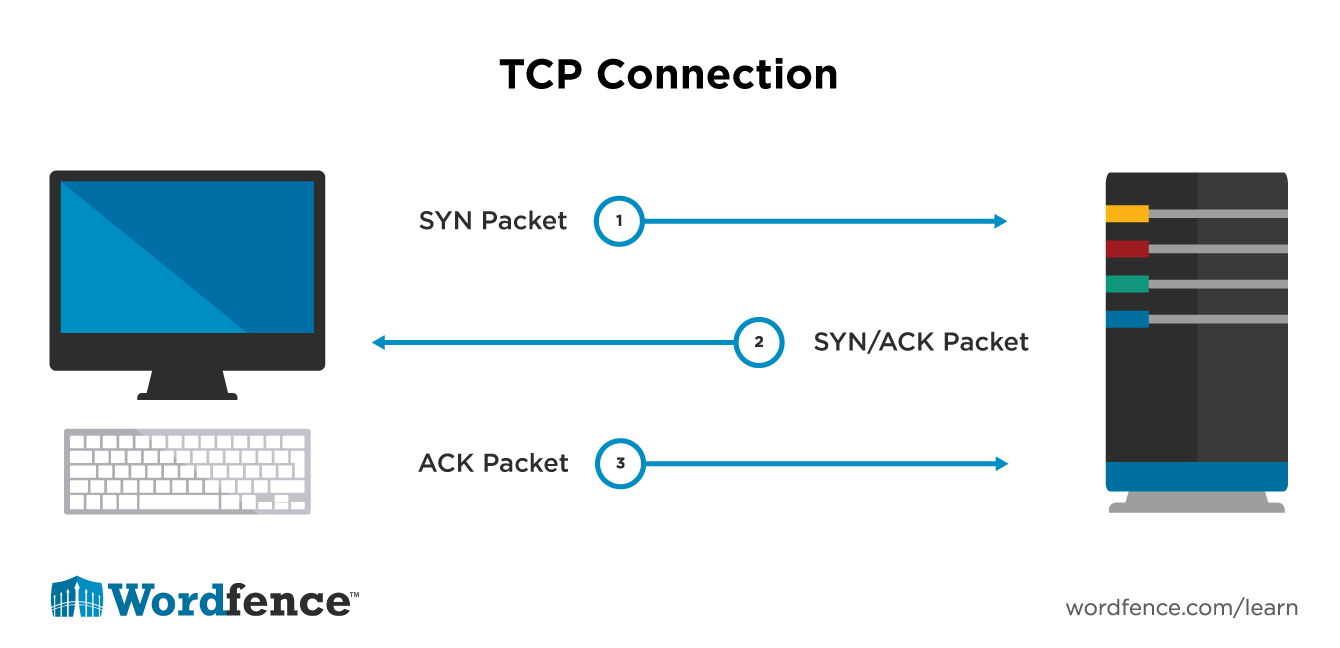

TCP is a layer 4 protocol and it establishes a connection between machines using a three way handshake. A machine that wants to connect to another will send a packet with the SYN flag set. The server being connected to will respond with a packet with the SYN and ACK flags set. And then the machine that started the connection responds with a packet with the ACK flag set. Once complete, a TCP connection is established between the two machines.

When you connect to a web server, a TCP connection is established. Other examples of TCP connections are SSH, SFTP, receiving email via POP or IMAP and connecting via HTTPS. There are many other examples.

Remember that the Transport Layer, or layer 4 is responsible for maintaining connections on the Net and that TCP lives at layer 4.
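To see a TCP connection being established, here is a small sketch in Python that opens a connection between two sockets on the same machine. It assumes the loopback address 127.0.0.1 is available, and the variable names are our own. The operating system's TCP/IP stack performs the SYN, SYN-ACK, ACK handshake for you when `create_connection` is called, so you never set the flags by hand.

```python
import socket

# Server side: listen on the loopback interface; port 0 lets the OS pick a free port.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)  # SOCK_STREAM means TCP
server.bind(("127.0.0.1", 0))
server.listen(1)
host, port = server.getsockname()

# Client side: this call returns once the three way handshake has completed.
client = socket.create_connection((host, port), timeout=5)

# The server picks the established connection off its queue.
conn, client_addr = server.accept()

# With the connection established, data can flow over it.
client.sendall(b"hello over TCP")
received = conn.recv(1024)
print(received)

for s in (conn, client, server):
    s.close()
```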

The Session Layer (layer 5)

The session layer is not often referred to in networking. It provides functions like graceful termination of connections. It is also referred to in remote procedure calls. Unfortunately the session layer does not really apply to TCP/IP and the Internet suite of protocols, so it is mostly a theoretical part of the OSI model.

The Presentation Layer (Layer 6)

This layer is sometimes called the syntax layer. It isn’t often referred to, but in general the Presentation Layer is responsible for converting application data to a syntax that is ready for network transport using lower layer protocols.

Examples of Layer 6 protocols or syntaxes are XML, JSON and objects that have been serialized into a structure that can be transported across the network.
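As a rough illustration of what “converting application data to a syntax” means, this Python sketch serializes an in-memory object to JSON bytes and decodes it again. The `message` contents are made up for the example.

```python
import json

# Application data as it lives in memory
message = {"user": "admin", "action": "login", "attempts": 3}

# Encode it into a syntax (JSON text, then UTF-8 bytes) that can be transported
wire_bytes = json.dumps(message).encode("utf-8")

# The receiving side decodes the bytes back into application data
decoded = json.loads(wire_bytes.decode("utf-8"))
print(decoded == message)  # True
```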

The Application Layer (Layer 7)

Layer 7 is the layer closest to the end user and refers to all the networking functions provided by an application. For example, Skype is a voice-over-IP networking application. It relies on lower layer protocols to transport its data across the network. But the encoding of your voice into binary before it is handed to lower layers of the OSI model is done by Skype itself using a “codec”. This encoding of your communications happens at Layer 7. Skype also may add some addressing and ordering data to the voice stream before it gets handed to lower level protocols and these are Layer 7 or “application layer” protocols.

Most Internet application protocols are layer 7 protocols. This includes HTTP, HTTPS, SMTP, FTP, SSH, SCP, POP, IMAP, Rsync, SOAP, Telnet and most other applications on the Net that you recognize. When you hear the phrase “layer 7 filtering” it means that a network device is inspecting packets as deep as possible, all the way into the application data.

Besides Layer 2 and 3, Layer 7 is also referred to by network engineers. When your website experiences a distributed denial of service or DDoS attack, a hacker tricks many machines on the Internet to send traffic to your website at the same time. It is difficult for routers (at layer 3) and switches (at layer 2) to filter out that traffic with the limited information they have access to.

What your network provider will do to stop the DDoS attack is to route all incoming traffic via layer 7 filters. These filters perform deep packet inspection beyond just layer 3, which is all routers will look at. Layer 7 filters inspect packets all the way up the OSI into layer 7 and look at the application data the packets contain. They then use this information to determine if traffic is legitimate or an attack and will filter out attacking traffic.

How packets travel from one machine to another via the OSI model

It helps to think of a packet starting at the application layer, with say a web browser communicating via HTTP. As the packet leaves the browser, it travels down the layers of the OSI, with each layer adding some information along the way. The information is either changed by a layer of the OSI or a layer will add some additional addressing or session information.

- The application layer creates the application data

- The presentation layer encodes it into a syntax that’s transportable

- The session layer may add RPC information to a packet or may add some connection oriented data.

- The transport layer adds a TCP header, including the flags and the sequence number that establishes the order of the packet

- The network layer adds source and destination IP address data and a few flags

- The data link layer will add the destination MAC address of the physical router the packet will be sent to on its way out to the Internet

- The physical layer will encode the packet into little square electronic waves and put those waves on the physical wire.

The packet will be sent across the Internet and as it reaches network devices, each device will decode the packet usually only up to layer 3 and may re-encode it slightly differently, adding a new MAC address or changing a few flags here and there as it travels along.

When the packet reaches the destination machine it traverses back up the OSI model as follows:

- The physical layer decodes those little square electronic waves as they come off the wire

- The data-link layer decodes the MAC information and verifies this packet belongs to our physical network card

- The network layer strips off the IP address information and may make decisions like “Am I going to allow this IP address past my firewall rules?”

- The transport layer looks at the TCP sequence of the packet and may do some packet reassembly.

- The session layer may inspect remote procedure call data or may determine that a connection needs to be gracefully closed or maintained open.

- The presentation layer decodes any XML, JSON, serialized objects or other network data into a form applications can understand.

- The application layer receives the application data and a protocol like HTTP might determine that a response has just been received from an HTTP server and will pass that data into a browser so that it can be displayed to the user.

If you can visualize this transition of data from a source machine, down through the OSI layers, along the network and its receipt at a destination and its transition back up the OSI layers, it will help you understand the OSI model. As the data travels down from a source machine, data is added at each layer until layer 1, then as the data travels back up the OSI model on receipt, data is stripped off until only the application data is left.
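The journey down and back up the layers can be sketched in a few lines of Python. This is a toy model only: the bracketed header labels and the function names `send_down` and `receive_up` are invented for illustration, real headers are binary structures, and the session and presentation layers are left out to keep it short.

```python
# Illustrative header labels only, in the order they are added on the way down.
LAYERS_DOWN = [
    ("transport", b"[TCP seq=1]"),
    ("network", b"[IP src=192.168.1.26 dst=216.58.216.142]"),
    ("data-link", b"[MAC dst=94:10:3e:10:fd:21]"),
]


def send_down(app_data: bytes) -> bytes:
    """Wrap application data with one header per layer on the way down."""
    frame = app_data
    for _name, header in LAYERS_DOWN:
        frame = header + frame
    return frame


def receive_up(frame: bytes) -> bytes:
    """Strip the headers in reverse order on the way back up."""
    for _name, header in reversed(LAYERS_DOWN):
        if not frame.startswith(header):
            raise ValueError("unexpected header")
        frame = frame[len(header):]
    return frame


frame = send_down(b"GET / HTTP/1.1")
print(frame)              # outermost header (MAC) comes first on the wire
print(receive_up(frame))  # b'GET / HTTP/1.1'
```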

In conversation with other network engineers and enthusiasts, you will find yourself referring to Layer 2 when talking about local area networks, Layer 3 when discussing routers and firewalls that inspect IP addresses, and Layer 7 when discussing application layer data.

TCP/IP Introduction

Before the Internet came along and even some time after it was invented, there were a variety of network protocols. IPX was very popular at the time because Novell NetWare was the most popular file server for local area networks. NetBIOS was also very popular and provided a way for Microsoft computers to talk to each other on a local network, but not across wide area links because it was a non-routable protocol.

TCP/IP became popular in the early 90s because it provided a way for computers running on different kinds of local area networks (Novell or Microsoft) to communicate with each other. And TCP/IP was routable over very large networks, so these computers could now communicate with other machines around the world.

The name TCP/IP refers to ‘IP’, which is the part of the Internet protocol that provides layer 3 routing and to ‘TCP’, which is the part of the protocol that allows for connections to be established between machines. It really should just be called ‘IP’ because everything rides on top of the Internet Protocol which provides the basic routing functionality. TCP simply provides connections on top of IP. UDP, or User Datagram Protocol provides for communication using single packets and is an alternative to TCP – so the protocol could also be called UDP/IP. But for some reason the World settled on TCP/IP and so that’s what we call the protocol that runs the Internet.

When you hear someone refer to an “IP stack” or a “TCP/IP stack”, what they’re referring to is the part of an operating system like Windows, OS X or Linux that allows that operating system to talk to a network using TCP/IP. It’s network slang that is a fancy way of saying “the drivers and software that an operating system uses to talk to a TCP/IP network”.

MAC Addresses and Local Networking

Before the Internet came along, we only had “local area networks”. These were networks isolated from the rest of the world that gave machines in the same building or local area the ability to communicate. Sometimes a local area network would be as simple as a boss’s machine, her secretary’s machine and a printer on one of the machines that the other one also has access to. They might also share a few files using a basic file sharing system.

We briefly discussed MAC addresses in the section discussing Layer 2 of the OSI model above and now we will discuss them in slightly more depth. MAC addresses are what machines on a local area network use to identify each other. A MAC address is a 12 digit address made up of hexadecimal numbers (0,1,2,3,4,5,6,7,8,9,A,B,C,D,E,F).

A MAC address looks like this:

94:10:3e:10:fd:21

That is actually the real MAC address of a WiFi router on our local area network. The first 6 digits are a vendor code. In this case the router is a Linksys, but if you use Wireshark’s MAC vendor lookup tool you will find that this MAC address belongs to a piece of hardware made by “Belkin International Inc”. In reality Belkin acquired Linksys in 2013, and so this router is a Linksys router that carries Belkin’s MAC address prefix. Sometimes vendor codes aren’t that easy to interpret.

When a machine has traffic for another machine on a local area network, it already knows the destination machine’s IP address. It needs to know what the hardware or MAC address is of the destination machine. So it sends out an ARP request (Address Resolution Protocol request) asking the network which machine has that IP address. The destination machine responds saying “Here is my MAC address”.

The machine that has data to send then stores that MAC address in an ARP table, also called an “ARP cache”. The ARP cache keeps track of IP address to MAC address mappings so that machines don’t have to send repeated ARP requests.

Once a machine knows another machine’s MAC address, it can send traffic directly to that machine on the local area network.

Your home WiFi router may have several network ports you can use to plug in an ethernet cable and have machines talk to each other using physical cabling. Those ports may be a ‘hub’ which means that the machines all share the same connection, so they cannot all talk at the same time and they need to coordinate between each other regarding who gets to talk while the others stay quiet.

Or if you have a more expensive WiFi device, those ports may be a switch, which means that your machines can all talk at the same time and the switch will make sure that traffic is only sent between the ports of machines talking to each other. A switch does this by keeping a table of MAC addresses in its memory and it uses this as a kind of routing table to route traffic between ports. Don’t confuse a switch with a router. A router on the Internet uses IP addresses to route packets between different networks. A switch simply routes local packets between physical network ports using a table of MAC addresses similar to the ARP cache on your local machine.
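The idea of a switch’s MAC address table can be sketched in Python as follows. The `Switch` class is a simplified illustration of our own, not how any particular device is implemented. One detail it adds that is not described above: when a switch has not yet seen the destination address, it sends the traffic out of every other port, much like a hub would.

```python
class Switch:
    """Toy switch that learns which MAC address lives on which port."""

    def __init__(self):
        self.mac_table = {}  # MAC address -> port number

    def handle_frame(self, in_port, src_mac, dst_mac, all_ports):
        """Return the list of ports the traffic is sent out of."""
        self.mac_table[src_mac] = in_port              # learn where the sender lives
        if dst_mac in self.mac_table:
            return [self.mac_table[dst_mac]]           # known: one port only
        return [p for p in all_ports if p != in_port]  # unknown: every other port


ports = [1, 2, 3]
sw = Switch()
print(sw.handle_frame(1, "68:5b:35:8e:4d:21", "94:10:3e:10:fd:21", ports))  # [2, 3]
print(sw.handle_frame(2, "94:10:3e:10:fd:21", "68:5b:35:8e:4d:21", ports))  # [1]
print(sw.handle_frame(1, "68:5b:35:8e:4d:21", "94:10:3e:10:fd:21", ports))  # [2]
```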

Remember that MAC addresses are hardware addresses that identify physical machines and devices on your network. A MAC address is never used outside your local network and machines on the Internet don’t even know what your MAC address is. Only machines and devices on a local network talking directly to each other and making ARP requests between themselves know about each other’s MAC addresses.

IP: The Internet Protocol and IP addresses

As we’ve learned above, machines on a local area network all have MAC addresses. The machine or device you’re using right now has a MAC address and is on a local area network. But when it sends packets beyond that local area network, it can’t use its MAC address because only machines on your local area network or local WiFi know about your machine’s MAC address. That is where IP addresses become useful.

On a TCP/IP network like the Internet, all machines and devices connected have an IP address. These are logical addresses whereas a MAC address is a physical address that identifies a physical device. A logical IP address operates at layer 3 of the OSI model and is used to identify unique machines and devices on a wide area TCP/IP network like the Internet.

How IP addresses are structured

To humans, IP addresses look like four numbers (also called ‘octets’) separated by dots. For example: 192.168.12.23 is a human readable IP address. This format is just the human readable way to write IP addresses. To machines, an IP address is actually just a number that ranges from zero to 4294967295. In programmer speak, an IP address is an integer (regular number without decimal point) that is unsigned (no numbers below zero) and is stored in 32 bits of address space (meaning that its maximum value is 2 to the power of 32 minus 1, which is 4294967295).

So now you know the little secret that the Internet has been keeping from you: IP addresses are actually just numbers. Want to see something really crazy? The IP address for Wordfence.com is actually 69.46.36.16. You can enter that into your web browser and you would be taken to Wordfence.com. But you can also enter the IP address as a number which is: https://1160651792/ and that will also take you to wordfence.com because your browser recognizes that you’re just entering an IP address in its integer format.
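You can check that conversion yourself. The Python sketch below does the arithmetic by hand (each octet is shifted into its place in the 32 bit number) and then confirms it with the standard library’s `ipaddress` module. The helper name `ip_to_int` is our own.

```python
import ipaddress


def ip_to_int(dotted: str) -> int:
    """Convert a dotted IPv4 address to its 32 bit integer form."""
    a, b, c, d = (int(part) for part in dotted.split("."))
    return (a << 24) | (b << 16) | (c << 8) | d


print(ip_to_int("69.46.36.16"))                   # 1160651792
print(int(ipaddress.IPv4Address("69.46.36.16")))  # 1160651792
print(ipaddress.IPv4Address(1160651792))          # 69.46.36.16
```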

In machines, IP addresses are stored as numbers which means we don’t need to store them as these long character strings of 192.168.123.125. Instead we can store them in 32 bits (remember, they’re 32 bit integers). That is the equivalent of only four characters of text which is really efficient. In Wordfence internally when we store IP addresses, for example IP addresses that have been blocked from your website, we store them as 32 bit integers because it’s way more efficient and we have functions that convert them back to human readable format before we show them to you in the list of blocked IP addresses.
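To make the size difference concrete, here is a small Python sketch that compares the text form of an address with its 32 bit form and converts it back. It uses the standard `ipaddress` module and is only an illustration of the idea. It is not the code Wordfence uses internally.

```python
import ipaddress

text_form = "192.168.123.125"
packed = ipaddress.IPv4Address(text_form).packed  # the same address as 4 raw bytes

print(len(text_form))  # 15 characters as text
print(len(packed))     # 4 bytes as a 32 bit integer

# Convert back to human readable form before showing it to a person
restored = str(ipaddress.IPv4Address(packed))
print(restored)        # 192.168.123.125
```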

How packets use IP addresses to cross the Internet

Let’s say you want to connect to Google.com. Your machine will use DNS (described below) to look up the address of Google.com and connect to it. The address might be 216.58.216.142. Your machine will then send a packet to start the process of connecting to 216.58.216.142. This packet leaves your machine (or device), and is sent to your local router (also called your default gateway) which is, if you’re on a home network, usually your cable modem or ADSL modem.

Your local router receives the packet, looks in its routing table to figure out where to send the packet and will usually send it on to one of your Internet Service Provider’s routers. That router will consult its own routing table, figure out where to send the packet and forward it accordingly. Eventually the packet will arrive at the destination which is 216.58.216.142, also known as google.com. Google will reply and the whole process is reversed.

Routers on the Internet use routing protocols to talk to each other and share and coordinate routing information. They figure out what the shortest routes are with the help of their human administrators. If a route goes down because someone cuts an undersea cable, for example, routers will automatically select an alternative route. Routers get some help from their human administrators when the admins tell the routers which route has a higher or lower cost. BGP or Border Gateway Protocol is an example of a routing protocol used on the Internet to coordinate routes between routers. Cisco has an in-depth guide on different routing protocols you might find interesting if you want to learn more about routing protocols.

Network Address Translation or NAT

Let’s take a closer look at what is happening on your local network. Firstly, most home networks use an IP range that starts with 192.168.1.X where X is a number from 1 to 254. You may also use 10.1.1.X. These are actually private network ranges and can be used by anyone on their own private home network or office network. They’re called RFC 1918 ranges and your router does something special called Network Address Translation or NAT which allows you to use a private range of IP addresses. NAT makes your whole network look like a single IP address to the wider Internet while in reality you have many devices hiding behind that single IP address.

With NAT, your ISP only has to assign you a single IP address. That is the IP address that your NAT router uses on its “external interface” (the side of the router facing the public Internet). Then on your internal network you can use as many IPs as you want provided you use an RFC 1918 range of IP addresses. There are actually several of these ranges and you can read more about them on Wikipedia. By far the two most popular ranges for private networking are 192.168.X.X and 10.X.X.X.
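If you want to check whether an address falls inside one of the private ranges, a short Python sketch like the one below will do it. The three ranges listed are the ones defined in RFC 1918, written in the CIDR notation covered in the next section. The function name `is_rfc1918` is our own.

```python
import ipaddress

# The three private ranges defined by RFC 1918
RFC1918_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),      # 10.X.X.X
    ipaddress.ip_network("172.16.0.0/12"),   # 172.16.X.X to 172.31.X.X
    ipaddress.ip_network("192.168.0.0/16"),  # 192.168.X.X
]


def is_rfc1918(address: str) -> bool:
    """Return True if the address is inside one of the private ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in net for net in RFC1918_RANGES)


print(is_rfc1918("192.168.1.10"))  # True
print(is_rfc1918("10.1.1.5"))      # True
print(is_rfc1918("69.46.36.16"))   # False
```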

Subnets, Subnet Masks and understanding CIDR notation

Note: This section goes into some depth on how subnet masks work and how to do some basic binary arithmetic to calculate how many machines can exist on a subnet. If you don’t want to go into this much depth you can skip this section. But you should at least know the following: A subnet mask is used to define a subnet (a local network) and how many addresses can exist on that subnet. There is the classic subnet format e.g. 255.255.255.0 and there is the CIDR notation which looks like this: 69.46.36.0/27 (for example). You can use a subnet calculator to calculate subnet info for either of these formats. Read on if you would like to learn about subnets in greater depth.

When you configure a machine to use TCP/IP, you’ll often just check the box saying that it’s using DHCP or that it “gets its IP address automatically”. We’ll explain how DHCP works in a moment, but first, let’s look at what you need to set up if you configure a machine manually. This will give you a great understanding of how routing happens on the Internet.

When you configure a machine manually for TCP/IP you need to set up three things: The IP address, the subnet mask and the default gateway. In reality you’ll also add a DNS server address, but this is only used for name lookups and technically a machine doesn’t need this to do pure TCP/IP communication. So forget about DNS for now.

The IP address is easy: It’s usually an address that is assigned to you by a hosting provider or ISP.

The default gateway is really easy to understand. It is just the local router on your network that your machine will need to talk to if it wants to send traffic out to the Internet.

The subnet mask is difficult to understand, so we’re going to take a moment to understand subnets and subnet masks. This will help you understand who owns networks and how they’re built.

When you’re given a subnet mask it might look like one of the following. These are two examples of subnet masks:

- 255.255.255.0

- 255.255.255.224

From a subnet mask you can tell how many machines are allowed on your network, what your network address is and what your broadcast address is.

A subnet mask is actually a binary number. Let’s take the first example, 255.255.255.0

In binary it looks like this:

11111111.11111111.11111111.00000000

What this tells us is that all the ones are the network part of our addressing scheme and all the zeros can be used by us to allocate addresses on our local network. So if we’re given an IP address of 192.168.1.26 with a subnet mask of 255.255.255.0 it means that our network starts at 192.168.1.0 and ends at 192.168.1.255, because we know that we can use the last number for all our host addresses.

There’s one slight catch: You can’t use the first or last addresses in your network for any of the machines on your network. So in our example, you can’t use 192.168.1.0 and you can’t use 192.168.1.255. The first address in your network is your network address and is used to identify your network, so you can’t use that. The last address in your network is your broadcast address and you can’t use that either. If you try to send traffic to the broadcast address, you may get a reply from all the machines on your network because it’s considered a network-wide broadcast.
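Here is a small Python sketch that works out the network address and broadcast address for an IP address of 192.168.1.26 with a mask of 255.255.255.0. It does the binary arithmetic by hand first (the variable names are our own), then checks the result against the standard `ipaddress` module.

```python
import ipaddress

ip = int(ipaddress.IPv4Address("192.168.1.26"))
mask = int(ipaddress.IPv4Address("255.255.255.0"))

# Keep only the bits where the mask has ones: that is the network address
network = ip & mask
# Set every bit where the mask has zeros: that is the broadcast address
broadcast = network | (~mask & 0xFFFFFFFF)

print(ipaddress.IPv4Address(network))    # 192.168.1.0
print(ipaddress.IPv4Address(broadcast))  # 192.168.1.255

# The standard library arrives at the same answer
iface = ipaddress.ip_interface("192.168.1.26/255.255.255.0")
print(iface.network.network_address)     # 192.168.1.0
print(iface.network.broadcast_address)   # 192.168.1.255
```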

So far it seems fairly easy. We know that the subnet mask shows you what the range of addresses is in your network and it gives you a way to figure out your network address and your broadcast address. But remember that the subnet mask is binary and it might not be as easy as 255.255.255.0 which is 11111111.11111111.11111111.00000000. In that example you get to use 0 to 255 as the last number (or octet in network terms) for your network which is really easy to do.

What if your netmask is 255.255.255.224? In binary that looks like this:

11111111.11111111.11111111.11100000

You can see the usual pattern of all ones until you get to the “host” portion of the address that defines which addresses you can hand out on your network. The number 224 in binary is 11100000 which is why we get that last octet in the binary subnet mask above. That tells you that only the zeros can be used when you’re handing out addresses on your network.

The easiest way to understand this is to take an example. Let’s use a real-world example. At Wordfence our data center gave us a block of IP addresses. They said our network address is 69.46.36.0 and our subnet mask is 255.255.255.224. They also told us that our “default gateway” or router is 69.46.36.1. From this we needed to figure out which IP addresses we can actually use.

We know that in binary we can only change the last 5 numbers because our subnet mask in binary says so. Remember our subnet mask of 255.255.255.224 in binary is: 11111111.11111111.11111111.11100000. We’re going to teach you a trick to calculate how many addresses you’re allowed and from there you can figure out your IP range.

- Use a calculator in programmer mode.

- Convert 224 into binary which gives you 11100000

- You can see that you have 5 zeros which tells you that only those last 5 binary digits are allowed to change on your subnet.

- Do the following math: 2 to the power of 5 is 32.

- Yay, it’s that simple. You’re allowed 32 addresses!

So the trick is to take the last part of your subnet mask and convert it to binary, look at how many zeroes you have and just say “two to the power of the number of zeroes gives me the number of addresses”. In this case 2 to the power of 5 gives us 32. Super easy.
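If you don’t have a programmer’s calculator handy, the same trick can be sketched in Python. The function name `addresses_in_subnet` is our own. It converts the whole mask to binary rather than just the last number, which gives the same answer for the masks used here.

```python
def addresses_in_subnet(mask: str) -> int:
    """Count the zeros in the binary subnet mask and return 2 to that power."""
    bits = "".join(format(int(octet), "08b") for octet in mask.split("."))
    zeros = bits.count("0")
    return 2 ** zeros


print(format(224, "08b"))                      # 11100000
print(addresses_in_subnet("255.255.255.224"))  # 32
print(addresses_in_subnet("255.255.255.0"))    # 256
```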

Now that we know we’re only allowed 32 addresses, we can take our real-world example and figure out what our address range is:

- We know we’ve been given 69.46.36.0 as our network address.

- We know our subnet mask is 255.255.255.224

- We know we’re allowed 32 addresses from our math above.

- So our IP address range is: 69.46.36.0 to 69.46.36.31.

- Note that the range is from 0 to 31 which is 32 addresses. Don’t make a “fencepost error” and think the range is from 0 to 32. It is actually 0 to 31 because 0 is an address too and you need to count that.

So now that we know our range is 69.46.36.0 to 69.46.36.31, we also know that our first address is our network address and we’re not allowed to use that. Our network address is 69.46.36.0. We also know that our last address is our broadcast address and we can’t use that either. Our broadcast address is 69.46.36.31.

We know that our router or default gateway is 69.46.36.1 because our hosting provider told us that. So from this we know that we can use the addresses from 69.46.36.2 to 69.46.36.30 inclusive for machines and devices on our network. Awesome!!
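The same result can be reproduced with Python’s standard `ipaddress` module, as a quick sanity check of the reasoning above. The variable names are our own.

```python
import ipaddress

net = ipaddress.ip_network("69.46.36.0/255.255.255.224")
gateway = ipaddress.ip_address("69.46.36.1")

print(net.network_address)    # 69.46.36.0
print(net.broadcast_address)  # 69.46.36.31

# hosts() leaves out the network and broadcast addresses for us;
# we also leave out the default gateway.
usable = [host for host in net.hosts() if host != gateway]
print(usable[0], usable[-1], len(usable))  # 69.46.36.2 69.46.36.30 29
```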

At this point we know how subnet masks work when they’re given to us in the form of: 255.255.255.0 or 255.255.255.224 or something similar. We just convert that last number into binary using a calculator and the number of zeros gives us a way to calculate how many addresses are on our network.

What if your hosting provider or someone else gives you a subnet mask that looks like this:

69.46.36.0/27

Don’t freak out just yet. This is easy now that you understand that subnet masks are just binary and there’s a really simple trick to this. Firstly, we know that our subnet mask is 32 binary digits. All that the above text is telling you is that the first 27 digits are ones. That /27 above says that.

Because we know that subnet masks are 32 digits, that means we have 5 zeroes (32 minus 27 is 5).

Now that we know we have 5 zeroes we know that we have “two to the power of the-number-of-zeroes” addresses on our network. So what is two to the power of 5? It’s 32. So again, we have 32 addresses on our network.

The above notation where they give you a network address followed by a forward slash and a number is called CIDR Notation. It is a shorthand network-geek’s way of telling you what your network address and subnet mask is. You’ll see this notation used when you look up who the owner of a network is using the Whois utility, described below. CIDR is very common among network engineers and if you understand it you can add one more point to your geekiness. (That’s a good thing!)
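To make the link between the /27 and the dotted mask concrete, here is a minimal Python sketch that builds the mask from the prefix length and counts the addresses. The helper name `prefix_to_mask` is made up for this illustration.

```python
def prefix_to_mask(prefix_len: int) -> str:
    """Build a dotted subnet mask from a CIDR prefix length (0 to 32)."""
    # prefix_len ones followed by (32 - prefix_len) zeroes, as a 32-bit number.
    mask = (0xFFFFFFFF << (32 - prefix_len)) & 0xFFFFFFFF
    # Split the 32 bits into four 8-bit numbers, highest first.
    return ".".join(str((mask >> shift) & 0xFF) for shift in (24, 16, 8, 0))

prefix_len = 27
zero_bits = 32 - prefix_len       # 5 zeroes
address_count = 2 ** zero_bits    # two to the power of the number of zeroes
mask = prefix_to_mask(prefix_len)

print(f"/{prefix_len} is {mask} with {address_count} addresses")
```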

Congratulations, you’ve just understood one of the most challenging things in TCP/IP: Subnet masks. Remember, to configure a machine manually on a TCP/IP network, we need 3 things: An IP address, a subnet mask and a default gateway. The subnet mask gives us a ton of useful information because it tells us what other IP addresses are on that network, what the broadcast address is and what the network address is. The first IP address in the range of usable addresses is usually the default gateway (also called router), and so you can even make a pretty good guess at what your default gateway address is going to be.

DHCP and how machines get IP addresses automatically

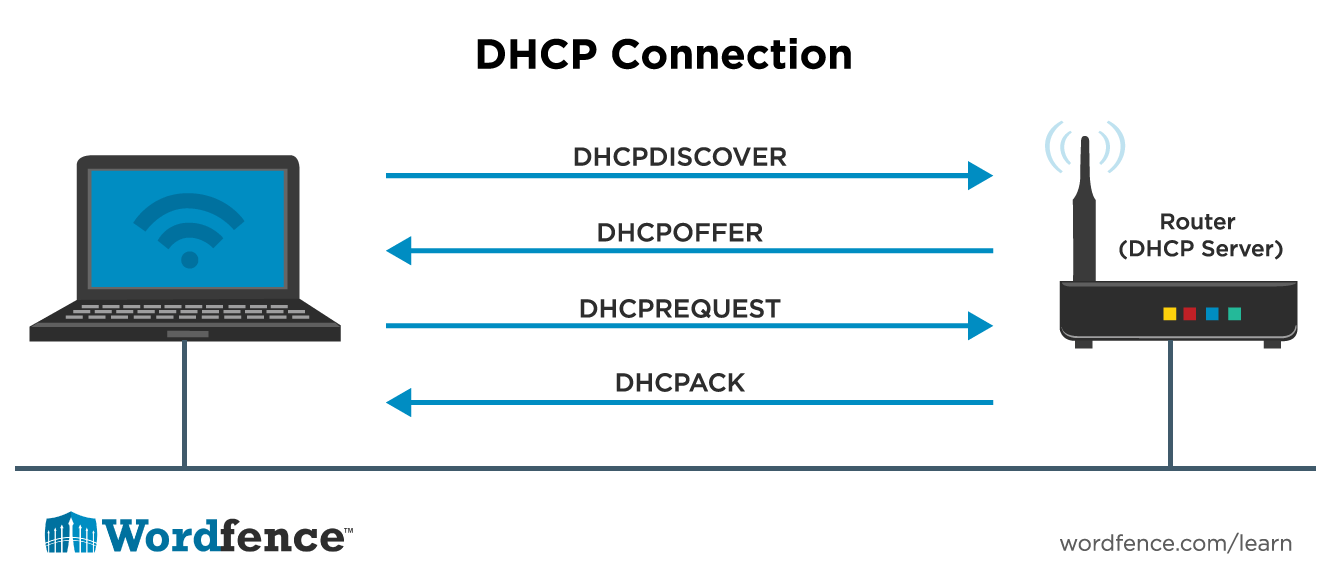

DHCP or Dynamic Host Configuration Protocol sounds fancy but it’s not. In the subnet mask section above we explained how to configure a machine manually with an IP address, subnet mask and default gateway. DHCP is a way for machines to figure this information out themselves.

Most home and office WiFi networks use DHCP. You simply check a box that says you want the machine to get its IP address automatically and it goes ahead and does that. Here’s how it works:

- When a machine with DHCP selected connects to the network, it sends out a broadcast packet asking if there are any DHCP servers available. This is called a DHCPDISCOVER packet.

- A DHCP server will respond with a DHCPOFFER packet which says “I’m available, and here is an address you could use”. This response usually comes from your WiFi router which also runs a DHCP server. Or if you are on an office network, your network administrator may have set up a DHCP server on your file server or domain controller.

- Then your machine will respond with a DHCPREQUEST which says “Yes, I’d love to have you send me network configuration information”.

- And finally the DHCP server responds with a DHCPACK which contains all the network configuration information that your machine needs. This includes those three essential things for TCP/IP networking: a subnet mask, IP address and default gateway. It also usually includes the address for a DNS server that your machine can use to convert names into IP addresses. (More on DNS below)

It’s important to note that a DHCP server “leases” an address to a machine for a period of time, usually several days. The lease may “expire” and at that time the machine will have to ask for another address. Usually a DHCP server will keep giving a machine the same address, but occasionally it will change.
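The idea of leasing can be pictured as a small table that the DHCP server keeps. The sketch below is a toy model only, not how any particular DHCP server is implemented; the `LeaseTable` class, its methods and the MAC addresses are invented for illustration, and the address pool reuses the example network from earlier in this article.

```python
import ipaddress

class LeaseTable:
    """Toy model of how a DHCP server might track leases (illustration only)."""

    def __init__(self, cidr: str, reserved: set[str]):
        network = ipaddress.ip_network(cidr)
        # Pool = usable addresses minus anything reserved (e.g. the gateway).
        self.free = [str(a) for a in network.hosts() if str(a) not in reserved]
        self.leases: dict[str, str] = {}  # MAC address -> leased IP address

    def request(self, mac: str) -> str:
        # A machine whose lease is still on record gets the same address again.
        if mac in self.leases:
            return self.leases[mac]
        ip = self.free.pop(0)
        self.leases[mac] = ip
        return ip

    def expire(self, mac: str) -> None:
        # When a lease expires, its address goes back into the pool.
        self.free.append(self.leases.pop(mac))

table = LeaseTable("69.46.36.0/27", reserved={"69.46.36.1"})
first = table.request("aa:bb:cc:00:00:01")
again = table.request("aa:bb:cc:00:00:01")   # same machine asks again
other = table.request("aa:bb:cc:00:00:02")   # a different machine
print(first, again, other)
```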

DHCP is usually only used on home and office networks and by Internet Service Providers who need to hand out IP addresses dynamically. In a hosting environment, individual IP addresses are handed out on a per machine basis because it is important that the address of a machine does not change. These are called “static addresses” if they are manually assigned vs “dynamic addresses” if a DHCP server is handing them out.

DNS – The Domain Name System

Imagine an Internet where instead of typing ‘google.com’ in your web browser you had to type in 173.194.123.73 and you had to remember that number. That would be very difficult, because for some reason words are easier to remember than numbers for us humans. So the DNS system was created that lets us assign word names to IP addresses.

When you type google.com into your web browser, your machine or device immediately sends off a DNS request to find out what the IP address of google.com is. It gets a reply of 173.194.123.73 or something similar and your browser then connects to that IP address. Sounds simple, right? Actually, it’s a little more complicated.

Here’s what really happens when you enter google.com into your web browser and hit enter. We aren’t going to go into too much detail because DNS is complex and a full explanation of DNS lookups is beyond the scope of this article. Here is the simple version of how DNS works:

- First, your machine will check to see if it has an entry for google.com in its ‘hosts’ file. That is a text file that exists on your machine in a special directory. This file can contain IP to host name mappings. If the file has an entry for google.com, then instead of doing a DNS lookup, your browser will just use what is in the ‘hosts’ file.

- Next your machine will contact your local DNS server. That is the server that you configured when you set up TCP/IP or the DNS server that your local DHCP server handed to you. Your machine will ask this local server what the IP address of google.com is.

- Your local DNS server may have this entry cached (stored) and may answer you immediately if it did the lookup recently. If not, it will go out onto the Internet and find the DNS server responsible for google.com. (We’re glossing over this part. You can find a more complete explanation here.)

- Your local DNS server will then reply to you with the IP address of google.com or it may tell you it could not find the answer.

- Once your machine has the IP of google.com, it stores (caches) that IP address so it doesn’t have to keep asking your local DNS server the same question.

- Your browser then connects to google.com using its IP address.
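The order of those steps can be sketched in a few lines of Python. This is a simplified model, not real resolver code: the `resolve` function, the `fake_dns_server` stand-in and the dictionaries are all invented here to show the lookup order (hosts file first, then the local cache, then the DNS server).

```python
from typing import Callable, Optional

def resolve(name: str,
            hosts: dict[str, str],
            cache: dict[str, str],
            ask_dns_server: Callable[[str], Optional[str]]) -> Optional[str]:
    # 1. An entry in the hosts file wins; no DNS request is made.
    if name in hosts:
        return hosts[name]
    # 2. A cached answer from an earlier lookup is reused.
    if name in cache:
        return cache[name]
    # 3. Otherwise ask the local DNS server, and cache any answer it gives.
    ip = ask_dns_server(name)
    if ip is not None:
        cache[name] = ip
    return ip

# Stand-in for the local DNS server; it records every question it is asked.
questions = []
def fake_dns_server(name: str) -> Optional[str]:
    questions.append(name)
    return {"google.com": "173.194.123.73"}.get(name)

hosts_file = {"www.example.com": "1.2.3.9"}
cache: dict[str, str] = {}
a = resolve("google.com", hosts_file, cache, fake_dns_server)       # asks the server
b = resolve("google.com", hosts_file, cache, fake_dns_server)       # answered from cache
c = resolve("www.example.com", hosts_file, cache, fake_dns_server)  # answered from hosts
print(a, b, c, questions)
```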

We’ve glossed over how your local DNS server connects to other DNS servers on the Internet to track down what the IP address of google.com is. Unless you are doing some serious DNS debugging or planning to host your own DNS server, you don’t need to know this.

An important item to note above is that your machine first consults its ‘hosts’ file before making a DNS request. You can edit your ‘hosts’ file to fool your browser into connecting to a different machine using the same name. For example, if your website is www.example.com and the IP is normally 1.2.3.4, you can change that IP to be 1.2.3.9 by editing your hosts file. You can then host a test version of your website on 1.2.3.9 that your customers can’t see, and access it yourself by typing www.example.com into your web browser. Hostgator has a helpful description of how to change your hosts file on various operating systems.
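A hosts file is just lines of text: an IP address followed by one or more names, with `#` starting a comment. As a rough illustration, here is a small Python sketch that reads text in that format. The `parse_hosts` helper and the sample contents are ours, and it deliberately parses a string rather than touching the real file on your machine.

```python
def parse_hosts(text: str) -> dict[str, str]:
    """Parse hosts-file text into a {hostname: ip} mapping."""
    mapping = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)  # keep the first entry seen for a name
    return mapping

sample = """
127.0.0.1   localhost
# Temporary override while testing the new server:
1.2.3.9     www.example.com example.com
"""
overrides = parse_hosts(sample)
print(overrides["www.example.com"])
```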

TCP or Transmission Control Protocol and Ports

IP gives us a way to pass packets across the Internet and is the layer 3 mechanism that gives us a way to ensure that packets get routed and arrive at their destination. Moving higher up the OSI model we need a way to have machines establish a connection between each other. TCP gives us that mechanism and it lives at layer 4.

TCP is used extensively on the Net because most web traffic is sent using it. Web browsers establish TCP connections to web servers before they can begin sending their request and receiving data. So it’s worth gaining a full understanding of how TCP works.

Servers that are listening for a TCP connection do so on a specific port number. Network ports are numbered from 0 to 65535, which gives 65,536 possible ports (2 to the power of 16). In general, a server waiting for a connection listens on a port below 1024, although there are plenty of servers that listen on a higher port. For example, the memcached server listens on port 11211. Web servers listen on port 80 for HTTP and port 443 for HTTPS. Other TCP servers include mail (port 25), SSH (port 22) and FTP (port 21). For a list of TCP and UDP ports and what they’re used for, this document on Wikipedia contains a fairly complete list.

A ‘client’ or connecting machine receives data on a port of its own, which the operating system usually assigns at random from the range above 1024. A client will open a connection to a server by connecting to its port. Multiple clients can connect to the same server port and the operating system and application will handle differentiating between each connection and keeping track of who said what.
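You can watch the operating system hand out a client port with a short Python experiment. This sketch runs entirely on your own machine (the loopback address 127.0.0.1) and, as an assumption for the demo, lets the OS pick the server port too, so it does not need permission to use a low port like 80.

```python
import socket

# Server side: port 0 asks the OS to pick any free port for this demo.
# A real web server would bind to a well-known port such as 80 instead.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen()
server_port = server.getsockname()[1]

# Client side: we never choose a local port, so the OS assigns one.
client = socket.create_connection(("127.0.0.1", server_port), timeout=5)
conn, client_addr = server.accept()

client_port = client.getsockname()[1]
print("server is listening on port", server_port)
print("client was assigned port", client_port)

# The server sees that same client port as the source of the connection.
same_port_seen = (client_addr[1] == client_port)

for s in (conn, client, server):
    s.close()
```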

Inside a packet on the Internet there are “flags”. These flags are like little switches that you can turn on or off. An IP packet has various flags and so does a TCP packet. So when you think about flags, just think about a TCP packet arriving with several switches on and several off, and the ones that are on mean something.
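Because each flag is a single bit, a set of flags is just a small number. The Python sketch below uses the bit values of the six classic TCP flags; the `decode_flags` helper is a name we made up. The SYN, ACK, FIN and RST flags it mentions are the ones discussed in the sections that follow.

```python
# Bit values of the classic TCP flags within the TCP header's flags field.
TCP_FLAGS = {
    "FIN": 0x01,
    "SYN": 0x02,
    "RST": 0x04,
    "PSH": 0x08,
    "ACK": 0x10,
    "URG": 0x20,
}

def decode_flags(value: int) -> list[str]:
    """Return the names of the flags that are switched on in value."""
    return [name for name, bit in TCP_FLAGS.items() if value & bit]

syn_only = decode_flags(0x02)   # only the SYN switch is on
syn_ack = decode_flags(0x12)    # SYN (0x02) and ACK (0x10) together
print(syn_only, syn_ack)
```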

How connections are established in TCP

TCP is a connection oriented protocol. That simply means that for data-flow to happen using TCP, you need to establish a connection. That is one of the most useful things about TCP: That you have a connection you can rely on. So with TCP, you get confirmation that both sides are listening rather than just sending packets into the ether and “hoping for the best”. You also get guaranteed delivery with TCP and if a packet goes missing, TCP will make sure that the missing packet is retransmitted. You can think of TCP as the FedEx of the Internet with a tracking number and insurance on your package.

It’s important to point out that TCP is a layer 4 protocol which is quite low on the OSI model. The actual establishing of TCP connections is handled by the operating system e.g. Windows, OS X or Linux. It is not handled by the web browser or web server, but the underlying operating system. The OS provides an “IP stack” which includes the ability to establish TCP connections. So when a web browser wants to establish a connection, it asks the operating system to do the establishing and gets back a connected “socket” it can use for communication. If you want to adjust the performance of TCP connections, you need to change an operating system setting, not a setting in your web browser or web server.

When a TCP connection is established, there is a three-way handshake that takes place. Let’s take an example of a web browser wanting to connect to a web server.

- When the browser’s operating system starts the connection it sends a packet with the SYN flag set.

- The web server’s operating system will respond with a packet with the SYN and ACK flags set.

- The browser’s operating system will then send a third packet with the ACK flag set.

Once this three-way handshake is complete, the connection is established and data transfer can take place. This three-way handshake happens every time a web browser connects to a new web server. Notice we say ‘new’ web server, because a web browser will reuse a connection to send multiple requests.

How TCP connections are closed

There are several ways a TCP connection can be closed. The first method is:

- The browser OS wants to close a connection to a web server and sends a packet with the FIN flag set.

- The web server OS sends back a packet with the ACK flag set which acknowledges the request to close the connection.

- The web server OS then sends back a packet with the FIN flag set indicating it also wants to close the connection.

- The browser OS then sends back a packet with the ACK flag set indicating it is acknowledging the close request.

At this point the connection is closed. More commonly, the second and third steps above are combined into a single step, so when the web server OS responds, it sends back a packet with both ACK and FIN flags set. This saves one step in this process and the connection closes faster.

There is another way that connections are closed. Instead of sending a FIN flag, the machine that wants to close the connection sends an RST flag. When a machine sends an RST flag it is saying that “there is no connection and I won’t listen to anything else you have to say on this connection”. Usually an RST flag is sent when, for example, two machines on the Internet had a connection but one of them is rebooted. In that case one of the machines still thinks there is a connection active and might try to send traffic. The machine that was rebooted responds with an RST which immediately closes the connection on the machine that thought there was still a connection active.

TCP is a Reliable Protocol

TCP includes other features to ensure that data is reliably delivered. It will reassemble data that is fragmented during transit. It will put packets back into the correct order if they’re received out of order – in a case where a packet transmitted second arrives first, for example. TCP will also detect packet loss and missing packets will be retransmitted by the sender.

TCP uses checksums to detect errors and if an error occurs, TCP will make sure that an error-free version of the packet is retransmitted. TCP also detects duplicate packets and will discard any duplicates.
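To give a feel for how a checksum catches damage, here is a Python sketch of the 16-bit one’s-complement checksum used across the IP family of protocols. It is simplified: real TCP computes it over the whole segment plus some addressing information, while this example just runs it over a short payload. The function and variable names are ours.

```python
def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement checksum in the style used by IP, TCP and UDP."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length data with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]  # add each 16-bit word
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back in
    return ~total & 0xFFFF

payload = b"hello, world"
checksum = internet_checksum(payload)

# Receiver's check: intact data plus its checksum gives a result of zero.
intact = internet_checksum(payload + checksum.to_bytes(2, "big")) == 0

# Flip one bit "in transit" and the check no longer gives zero.
damaged = bytes([payload[0] ^ 0x01]) + payload[1:]
corrupted = internet_checksum(damaged + checksum.to_bytes(2, "big")) != 0
print(hex(checksum), intact, corrupted)
```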

TCP also includes flow-control which lets a receiving machine or device tell the sender how much data it can accept before the sender needs to wait for acknowledgement. Flow control in TCP uses a ‘sliding window’ where the receiver specifies how much data it can handle before, for example, it runs out of buffer memory. A smartphone may not be able to handle as much data as a workstation.

TCP includes congestion control where a sender will use several algorithms to determine whether the network is congested and if it should wait before sending more data. The sender uses the speed at which it receives acknowledgments from the receiver on the other side of the network to infer how much congestion there is.

As you can tell, TCP is a complex protocol. Now that you understand the basic connection setup and tear-down, you will be able to better diagnose network connection problems and you will be able to identify the start and end of connections during analysis which is an excellent start to working with TCP.

UDP or User Datagram Protocol

Now that you understand the basics of TCP, UDP is incredibly simple. UDP also uses ports just like TCP. A server listens on a port for UDP packets and a client listens on its own port for replies to its packets. UDP does not include packet-loss detection, does not detect duplicate packets and does not verify if a connection is established and that the other end is listening. UDP also doesn’t detect if packets are received out of order. UDP does include basic error detection using checksums.

So why would anyone in their right mind use UDP? It’s simple: performance.

Understanding Latency

Imagine you are face to face with a friend having a conversation. Your words reach your friend’s ears instantly and they’re able to respond to you very quickly. You play a game where you count to 10 and each person says an alternating number. You say “one”, your friend says “two” as soon as he hears you, you say “three” as soon as you hear him say “two” and so on. You’re able to get to ten very quickly because you can hear each other instantly.

Now imagine you and the same friend are standing on separate mountain tops that are separated by a deep and wide valley. It’s a perfectly calm day with no wind or background noise. If you shout across the valley, it takes about 3 seconds for your voice to reach the other side where your friend can hear you. Your friend can see your mouth moving in the distance but has to wait until he can hear what you said before he is able to reply.

Now you’re playing the same game described above, each counting alternate numbers as soon as you hear the other. It takes much longer because when you say “one” it takes 3 seconds for your friend to hear it before he can say “two”. It takes another three seconds for you to hear his “two” before you can say “three”. So it takes way longer for you to get up to 10. That is latency. It’s the round-trip lag that occurs in communication.

Satellite and undersea cable providers have been struggling with latency for a long time because signals can travel no faster than the speed of light, which is only about 300,000,000 meters per second. That’s slow if you are waiting for a signal to travel from Russia to the United States (about 7,462,400 meters) and back before you can get any actual work done.
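Using the two figures above, the best possible round trip works out as follows. This is back-of-the-envelope arithmetic only; real round trips are longer because cables don’t follow a straight line and every router along the way adds delay.

```python
SPEED_OF_LIGHT_M_PER_S = 300_000_000  # figure used in the text
DISTANCE_M = 7_462_400                # Russia to the United States, from the text

one_way_s = DISTANCE_M / SPEED_OF_LIGHT_M_PER_S
round_trip_ms = 2 * one_way_s * 1000

print(f"one way:    {one_way_s * 1000:.1f} ms")
print(f"round trip: {round_trip_ms:.1f} ms")
```

That is roughly 25 milliseconds each way, or about 50 milliseconds before the first reply can possibly arrive.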

The Need for Speed

Now that you understand latency, you have probably already guessed why UDP can be faster than TCP. You don’t get the reliability of TCP, but you also don’t need to set up connections with a three-way handshake. Remember, if you are using TCP, a three-way handshake needs to establish a connection before data transfer can take place. That three-way handshake is three trips between client and server machine. When you consider how slowly light (and therefore electronic signals) travels over long distances, going from the United States to Japan, and back again, and back to Japan AGAIN, is a slow process and waiting for connection establishment might make many applications unusable.

Not only that, but with TCP, you have a sliding window where you can only send so much data before you need to stop and wait for the other side to acknowledge. This also slows things down.

UDP might not give you reliability, but if we want to get a message from the United States to Moscow FAST, we would use UDP. Using UDP we can send a single packet to a machine in Moscow and we don’t need to establish a connection or even wait for acknowledgement. The Internet is reasonably reliable so we can be fairly confident that the packet arrived at its destination. If you are building applications that aren’t mission critical, like streaming video or online games, UDP is perfect for the job.
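Here is what “just send the packet” looks like in Python. This sketch stays on your own machine using the loopback address, and the message text is invented. Notice that there is no connect step and no handshake: the first packet sent already carries the data.

```python
import socket

# "Server": a UDP socket bound to a port. Port 0 lets the OS pick a free one.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(5)  # don't wait forever if the packet never arrives
receiver_addr = receiver.getsockname()

# "Client": no connection is set up. The very first packet carries the data.
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
sender.sendto(b"player 7 moved to (12, 40)", receiver_addr)

message, source = receiver.recvfrom(1024)
print(message, "from port", source[1])

sender.close()
receiver.close()
```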

Online gaming developers in particular love UDP because it massively reduces latency. Instead of doing three-way handshakes, waiting for connections to be established or data to be acknowledged, game developers have been able to use UDP to send bursts of data whenever data is available. This keeps online games fast.

How are errors handled with UDP?

The UDP protocol lives at layer 4 of the OSI model. Apart from its basic checksum, the protocol itself doesn’t detect lost packets or retransmit them. So if you are an application developer, creating an application that lives at layer 7 of the OSI model, you need to include that loss detection and retransmission yourself. You may choose to ignore many kinds of missing packets. For example, it doesn’t matter if you miss an update of a character’s position in a game because you’ll get the next one and they’ll just skip a step. But what if a character in a game dies? It’s important that the other side receives that transmission, so a client may send that to a server and expect the server to send a UDP packet with an acknowledgement back within a reasonable time. If it doesn’t arrive, the application detects the loss of information and retransmits, not the UDP protocol.

So with UDP, detecting and recovering from lost packets is built into the layer 7 application, not the layer 4 UDP protocol.
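The “send it, wait for an acknowledgement, send it again if none arrives” idea can be sketched like this in Python. To keep the example self-contained it does not use real sockets: `make_lossy_channel` is a hypothetical stand-in for “send a UDP packet and wait a short time for the reply”, and it simply pretends the first couple of attempts were lost.

```python
from typing import Callable

def send_reliably(message: str,
                  send_and_wait_for_ack: Callable[[str], bool],
                  max_attempts: int = 5) -> int:
    """Resend message until it is acknowledged; return the attempts used."""
    for attempt in range(1, max_attempts + 1):
        if send_and_wait_for_ack(message):
            return attempt
    raise TimeoutError(f"no acknowledgement after {max_attempts} attempts")

def make_lossy_channel(drop_first: int) -> Callable[[str], bool]:
    """Hypothetical stand-in for a UDP send plus a timed wait for a reply."""
    state = {"dropped": 0}
    def channel(message: str) -> bool:
        if state["dropped"] < drop_first:
            state["dropped"] += 1
            return False  # the packet or its acknowledgement was lost
        return True       # the acknowledgement arrived in time
    return channel

attempts_used = send_reliably("character 42 died", make_lossy_channel(drop_first=2))
print("acknowledged after", attempts_used, "attempts")
```

An update that doesn’t matter much, like a position change, would simply skip `send_reliably` and be sent once.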

What else is UDP used for?

UDP is used for gaming, streaming video and DNS. The reason DNS uses UDP is because the first thing your web browser does before connecting to a web server is send off a DNS request to determine which IP belongs to a domain name. A small piece of data is sent and a small response is received. UDP is perfect for this because it is low latency and a single packet is sent and received. Other applications include DHCP which we’ve already discussed and SNMP (Simple Network Management Protocol), which provides a way to send and receive network management data.

HTTP and HTTPS

We have worked our way up the OSI model from Layer 2 where machines pass data directly to each other on a LAN using MAC addresses, via layer 3 which is where IP addresses exist and packets are routed on the wide-area-network that is the Internet, through layer 4 which is where connections are established using TCP or we have connectionless packets sent via UDP. We’re now going to skip up to Layer 7 which, along with Layer 2, 3 and 4 is one of the layers of the OSI that is critically important to understand.

Most interesting internet protocols live at layer 7 and that includes HTTP and HTTPS, which are the protocols that web browsers use to talk to web servers. Let’s explore HTTP in a little more depth.

HTTP connection, request and response

When a web browser connects to a web server to make an HTTP request and hopefully receive an HTTP response, the following steps take place:

- The browser does a DNS lookup to determine what the IP address of the web server is.

- Once it has the IP address, it asks the operating system to establish a TCP connection with the web server.

- A three-way TCP handshake ensues and if all goes well, the web browser machine establishes a TCP connection to port 80 of the web server.

- At this point HTTP takes over and the web browser sends an HTTP request to the web server. We’ve already explained how DNS, IP and TCP work, so you have the preceding three steps covered. We’re going to explore HTTP requests and responses in more depth in this section.

- Once the web server receives the HTTP request on port 80, it does some work and sends back an HTTP response over the established TCP connection.

- The web browser will show the user the results in the browser display panel.

- If keep-alive is enabled on the web server, the browser can reuse the connection to send additional HTTP requests and get responses. Connection reuse saves time because it saves the web browser from doing a three-way TCP handshake every time it wants to send a request to the web server. If you recall our discussion on latency, you’ll understand why this is a helpful optimization.

- Eventually the user may close the browser window, move on to another site or the connection might just time-out. In any case, the connection is closed.
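The connection reuse described in the steps above can be seen in a small, self-contained Python experiment. It starts a tiny web server on your own machine (on a port the OS picks, rather than port 80) and sends two requests over one connection. The `Hello` handler is invented for this demo; the client’s local port staying the same is our evidence that no second TCP connection was opened.

```python
import http.client
import http.server
import threading

class Hello(http.server.BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # lets the server keep connections open

    def do_GET(self):
        body = b"hello\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet

# Port 0 asks the OS for any free port; a real site would use port 80.
server = http.server.HTTPServer(("127.0.0.1", 0), Hello)
threading.Thread(target=server.serve_forever, daemon=True).start()

# One TCP connection (one three-way handshake) ...
conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1], timeout=5)
statuses = []
local_ports = []
for path in ("/", "/test.html"):
    # ... carries several HTTP requests and responses.
    conn.request("GET", path)
    response = conn.getresponse()
    response.read()  # finish this response before sending the next request
    statuses.append(response.status)
    local_ports.append(conn.sock.getsockname()[1])

conn.close()
server.shutdown()
server.server_close()
print(statuses, local_ports)
```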

Now that you understand how TCP connections are established, understanding how a simple HTTP connection is established is easy. Most of the work is done by TCP. Let’s discuss how an HTTPS connection works before we move into the details of the HTTP protocol.

HTTPS connections

HTTPS is a term used to describe HTTP communication between browsers and servers that happens wrapped inside SSL or TLS. Let’s explain this in a little more detail. SSL is an older secure communications protocol that reached version 3.0 of development and then ended. TLS is simply the newer version of SSL and TLS version 1.0 is actually SSL version 3.1. So don’t get confused when you hear some people say SSL and others talk about TLS and others refer to SSL/TLS. They’re talking about the protocol that allows secure HTTP communication and TLS is just the newest version of SSL.

TLS versions 1.1, 1.2 and 1.3 also exist. TLS 1.3, the current version, was published as a standard in 2018, and TLS 1.2 is still widely used. From now on we’ll simply refer to TLS rather than SSL or SSL/TLS, since TLS is the newest version and name for SSL.

When you think of secure browser communication you think of HTTPS. As we’ve mentioned above, HTTPS is actually HTTP which exists at layer 7 of the OSI which rides on top of a secure channel. That secure channel is established by TLS at layer 5 and layer 6 of the OSI model. So to be clear, HTTPS is a combination of HTTP at layer 7 and TLS at layer 5 and 6.

Because TLS lives lower down on the OSI model, when an HTTPS connection is established, the first thing that happens is a TCP connection is established (Layer 4), then TLS establishes a secure channel (Layer 5 and 6) and then communication starts via HTTP (Layer 7).

Let’s look at the steps that occur when an HTTPS connection is established. We’ll refer to the browser machine as the ‘client’ and the web server as the ‘server’.

- Using a three-way handshake, the client OS establishes a TCP connection to the server OS on port 443, which is the port assigned to HTTPS.

- Once the connection is established, the client sends TLS (or SSL in older browsers) specifications to the server telling it what version of SSL or TLS it is running, what cipher suites it wants to use (encryption and hashing algorithms) and what compression methods it wants to use.

- The server then checks which is the highest version of SSL or TLS that both it and the client can use and picks that. It picks a cipher suite from the list the client sent. It may also pick a compression method.

- The server then sends the client which algorithms it has chosen.

- Following that, the server sends its cryptographically signed public key (the certificate) and information about the session key to the client.

- The client receives this cryptographically signed certificate and it verifies that a trusted certificate authority (CA) has signed the certificate and that it is valid. This lets the client know that they can trust the certificate they received from the server.

- The client then sends information to the server confirming the selected encryption method and indicates it is ready to begin secure communication.

- The server then sends a message that it is also ready to begin secure communication.

Once a secure channel has been established, the communication between client and server when using HTTPS is very similar to HTTP.

These steps give you a very basic introduction to how TLS or SSL sets up a secured channel at layer 5 or 6 of the OSI model. We haven’t gone into any detail on how public/private key signing and encryption works or how TLS uses symmetric key encryption instead of public key encryption to transfer most of the data.
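From a programmer’s point of view, all of those steps are hidden behind a couple of calls. The Python sketch below uses the standard `ssl` module; `describe_tls` is a helper name we made up, and the call at the bottom is left commented out because it needs Internet access. Treat it as a rough illustration of the TCP-then-TLS ordering, not a hardened client.

```python
import socket
import ssl

# Default client settings: verify the server's certificate against the
# system's trusted certificate authorities and check the host name.
context = ssl.create_default_context()

def describe_tls(host: str, port: int = 443) -> dict:
    """Connect to host, complete the TLS handshake, and report what was agreed."""
    # Layer 4 first: the TCP three-way handshake.
    with socket.create_connection((host, port), timeout=10) as tcp:
        # Then the TLS handshake on top of the TCP connection.
        with context.wrap_socket(tcp, server_hostname=host) as tls:
            return {
                "protocol": tls.version(),         # negotiated TLS version
                "cipher_suite": tls.cipher()[0],   # negotiated cipher suite name
                "certificate_subject": tls.getpeercert().get("subject"),
            }

# Example call (needs Internet access, so it is left commented out):
# print(describe_tls("www.example.com"))
```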

The HTTP Request

Now that a client has connected to a server, they’ve completed the three-way TCP handshake and if it’s an HTTPS connection, they’ve negotiated and set up a TLS connection, let’s look at the HTTP conversation.

HTTP is fairly simple. A client connects to a server, tells the server which document it wants, includes some helpful information like preferred language and browser version, and the server sends the client what it asks for. The first line of the request starts with the ‘request method’. The rest of the information in the request is included as headers. The name of each ‘header’ is the text to the left of the colon character in the request below. For example, the request includes a ‘Host’ header and a ‘User-Agent’ header, and they are collectively called the ‘request headers’. Remember: This is what the browser sends the server. Here’s an example of a real request with its ‘method’ and ‘headers’:

GET / HTTP/1.1
Host: qa1.wordfence.com
User-Agent: Mozilla/5.0 (X11; Linux x86_64; rv:31.0) Gecko/20100101 Firefox/31.0 Iceweasel/31.7.0
Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8
Accept-Language: en-US,en;q=0.5
Accept-Encoding: gzip, deflate
Cookie: wp-settings-time-1=1441863249
Connection: keep-alive

What you see above is exactly what a browser sent our qa1.wordfence.com server when sending a request. Let’s dissect it line-by-line and you’ll discover it’s incredibly easy to understand how the HTTP protocol works by example.
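Before going through it by hand, here is roughly how a program would take the same request apart. This Python sketch works on a shortened copy of the request above; `parse_request` is a name we chose, and a real web server does a good deal more checking than this.

```python
# A shortened copy of the example request. On the wire each line ends
# with a carriage return and line feed, and a blank line ends the headers.
RAW_REQUEST = (
    "GET / HTTP/1.1\r\n"
    "Host: qa1.wordfence.com\r\n"
    "Accept-Language: en-US,en;q=0.5\r\n"
    "Accept-Encoding: gzip, deflate\r\n"
    "Cookie: wp-settings-time-1=1441863249\r\n"
    "Connection: keep-alive\r\n"
    "\r\n"
)

def parse_request(raw: str):
    """Split a raw HTTP/1.1 request into its first line and its headers."""
    head = raw.split("\r\n\r\n", 1)[0]        # headers end at the blank line
    request_line, *header_lines = head.split("\r\n")
    method, path, version = request_line.split(" ")
    headers = {}
    for line in header_lines:
        name, value = line.split(":", 1)      # split on the first colon only
        headers[name.strip()] = value.strip()
    return method, path, version, headers

method, path, version, headers = parse_request(RAW_REQUEST)
print(method, path, version)
print(headers["Host"])
```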

GET / HTTP/1.1

This first line is the browser telling the web server that it is sending a GET request instead of a POST request or one of the several other request methods. This is called the ‘request method’. The forward slash that follows is the path and in this case the browser is telling the server it wants the home page. It could have said /test.html instead if it wanted that page.

What follows after the forward slash is the protocol, in this case HTTP/1.1 or HTTP version 1.1 in plain English. Every modern browser supports HTTP 1.1 and has since the late 1990’s. HTTP 1.1 introduced the idea of virtual hosting, where many websites can exist on the same IP address. Most shared WordPress hosting plans use virtual hosting which gives you an idea of how wide-spread virtual hosting is. If a browser does not support HTTP 1.1, it would not be able to see most of the world’s websites.

HTTP/2 was published as a standard in 2015 and is now supported by all major browsers.

If the browser uses the POST method instead of GET, it means it wants to send some additional data with the request. POST is almost always used for web forms where the web browser needs to include the form contents with the request. When a POST request is sent, the browser will send the request headers followed by a blank line and then the encoded form data.

Host: qa1.wordfence.com

In our discussion of the GET line above, we mentioned that virtual hosting appeared in HTTP/1.1 in the late 1990’s and that it allows a web server to host multiple sites on a single IP address. The ‘Host:’ line in an HTTP request is what makes this possible. If we have 20 websites hosted on IP address 69.46.36.16, and we visit that IP with a web browser, there is no way for the web server on that IP address to know which website to serve unless the browser tells the web server what it wants. That is where the ‘Host:’ header comes in.

By specifying which ‘host’ the browser wants, the web server knows which website is being requested and internally the web server routes the request to that website’s configuration. This technique is called ‘virtual hosting’ and it is why a ‘Host:’ header is included.
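In code, that internal routing can be as simple as a lookup table keyed on the Host header. The Python sketch below is only an illustration of the idea; the `SITES` table, the directory paths and the `pick_site` helper are all hypothetical and do not reflect how any particular web server stores its configuration.

```python
# Hypothetical mapping from Host header value to a site's document root.
SITES = {
    "qa1.wordfence.com": "/var/www/qa1",
    "www.example.com": "/var/www/example",
}
DEFAULT_SITE = "/var/www/default"

def pick_site(headers: dict[str, str]) -> str:
    """Choose which site should answer, based on the request's Host header."""
    host = headers.get("Host", "")
    host = host.split(":", 1)[0].lower()  # ignore an optional :port, and case
    return SITES.get(host, DEFAULT_SITE)

print(pick_site({"Host": "qa1.wordfence.com"}))
print(pick_site({"Host": "unknown.example.org"}))
```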

User-Agent: Mozilla/5.0 (X11; Linux x86_64; rv:31.0) Gecko/20100101 Firefox/31.0 Iceweasel/31.7.0

This is one of the most useful headers included and if you are a web site admin or developer you will find yourself occasionally poring over a User-Agent header. This header tells the web server who makes the web browser, what its name is, what version the web browser is and which operating system it is running on. It even includes some information about the operating system.

If you’re privacy conscious you might wonder at how much information is disclosed when all you wanted was a simple web page. Every request you make on the web includes this much information about your web browser and operating system.

In this case we’re using a browser called Iceweasel version 31.7.0 running on Linux. We can also tell that the version of Linux is 64 bit.

The User-Agent header is used by some web servers to serve different content to different browsers. This practice is generally discouraged and it is called user-agent sniffing.

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8

The ‘Accept’ header is where the browser tells the server what kinds of documents it accepts. In this case it will accept HTML and XHTML+XML. It is also telling the web server that it will accept XML or anything else when it specifies the ‘*/*’ string and it includes some information telling the web server what it prefers.

The ‘q’ parameters give a browser a way to tell a web server what it prefers. If a media type (e.g. text/html) is not followed by a q= parameter, then it means that the level of preference is 1. If it is followed by a q parameter, the value will usually be below 1 and will indicate a level of preference.

So the above Accept: header can be interpreted in English as: “I prefer text/html and application/xhtml+xml. But if you don’t have those available, I’ll take application/xml and if you don’t have that I’ll take anything else as my least preferred option.”

As you can see, the ‘q’ parameter generally indicates lower preference media types.
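Here is a minimal Python sketch of how a server might turn that header into an ordered list of preferences. The `parse_accept` helper is our own name, and it only handles the simple form of the header shown in this article.

```python
def parse_accept(header: str) -> list[tuple[str, float]]:
    """Return (media_type, q) pairs, most preferred first."""
    choices = []
    for item in header.split(","):
        media_type, *params = [part.strip() for part in item.split(";")]
        q = 1.0  # no q parameter means a preference level of 1
        for param in params:
            if param.startswith("q="):
                q = float(param[2:])
        choices.append((media_type, q))
    # sorted() is stable, so types with an equal q keep their original order.
    return sorted(choices, key=lambda choice: choice[1], reverse=True)

accept = "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8"
preferences = parse_accept(accept)
for media_type, q in preferences:
    print(q, media_type)
```

The same function works on other headers that use ‘q’ parameters, for example `parse_accept("en-US,en;q=0.5")`.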

Accept-Language: en-US,en;q=0.5

The Accept-Language header is fairly simple. The browser tells the web server which languages it prefers using language tags. These tags are coordinated by IANA (The Internet Assigned Numbers Authority) and the first part of the tag is an ISO-639 language abbreviation. The second part of the tag (after the dash) may not be present, but if it is, it is an ISO-3166 country code.

The ‘Accept:’ section above explained how ‘q’ parameters allow a browser to specify preference to a web server. In the Accept-Language header above, we have a web browser telling a web server that it prefers United States English, but will take plain old English as a second choice.

Accept-Encoding: gzip, deflate

Encoding is very useful because it lets a web server encode or compress content before it is sent to a web browser. In this browser’s request it is telling the web server what encoding it accepts. In this case it is able to decode ‘gzip’ and ‘deflate’ encoding. Both are compression techniques which substantially reduce the amount of bandwidth required to transfer documents across HTTP.
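To get a feel for the savings, you can compress some repetitive HTML yourself with Python’s standard `gzip` module. The sample page content here is invented, and real pages will compress by different amounts.

```python
import gzip

# Made-up, repetitive page content standing in for a real HTML document.
page = b"<p>WordPress content tends to repeat itself.</p>\n" * 200

compressed = gzip.compress(page)        # what the server would send
restored = gzip.decompress(compressed)  # what the browser does on receipt

print(len(page), "bytes before,", len(compressed), "bytes after")
```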

Cookie: wp-settings-time-1=1441863249

This is a cookie request header. Or, put in colloquial web-speak, this is the part where your web browser sends the web server a cookie. If you are interested in Internet privacy or what cookies your website is storing and receiving, this header is what you will be inspecting to get that information.

In this case the cookie name is wp-settings-time-1 and the value is 1441863249.

A web browser can send multiple cookies to a web server. This is what it looks like:

Cookie: wp-settings-time-1=1441863249; wordpress_test_cookie=WP+Cookie+check

In this case there are two cookies being sent. We’ve discussed the first one already. The second one starts after the semi-colon and the name is wordpress_test_cookie. The value of the second cookie is WP+Cookie+check.

When analyzing cookies you may see a very long line after the ‘Cookie:’ header where several cookies are included and separated by semi-colons. The cookie values may be quite long in some cases which makes the cookie header very long.

Neither of the values above is encrypted, but cookie values are frequently encrypted so that the data being sent can’t be read by someone monitoring the network or even by the owner of the web browser.
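If you are inspecting cookie headers by hand, a small script can make long lines easier to read. The following is a minimal Python sketch that splits the value of the two-cookie header shown earlier into names and values. The function name parse_cookie_header is hypothetical and the sketch ignores edge cases such as quoted values.

```python
def parse_cookie_header(value):
    """Split the value of a Cookie request header into a name -> value dict."""
    cookies = {}
    for pair in value.split(";"):
        pair = pair.strip()
        if not pair:
            continue
        # Split on the first '=' only, because cookie values may contain '='
        name, _, cookie_value = pair.partition("=")
        cookies[name] = cookie_value
    return cookies


header_value = "wp-settings-time-1=1441863249; wordpress_test_cookie=WP+Cookie+check"
cookies = parse_cookie_header(header_value)
for name, cookie_value in cookies.items():
    print(name, "=", cookie_value)
```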

Connection: keep-alive

This is the web browser telling the web server that it wants to keep the connection open. If the web server agrees it will respond with “Connection: keep-alive” in its response. The connection will then be kept open rather than the browser and server having to establish another TCP connection with another three-way handshake and another TLS connection if they’re using HTTPS.

Keep-alive is the default in HTTP/1.1 so if this header is not included the connection will be kept open automatically. As you can tell, keep-alive is a nice performance improvement for browsers because the browser and server don’t have to keep reestablishing connections and setting up TLS if necessary. However, for every open connection a web server needs to manage that connection.

Some web servers like Apache (in prefork mode) use significant resources per connection so it may be preferable to disable keep-alive. Servers like Nginx use very few resources to manage each connection and so keep-alive is rarely disabled on an Nginx web server.

The HTTP Response

Now that we’ve seen a web browser send a real request to a web server, let’s take a look at what an HTTP response looks like in that same conversation. The following is a real server responding to the HTTP request we saw above:

HTTP/1.1 200 OK
Date: Mon, 21 Sep 2015 01:03:47 GMT
Server: Apache/2.4.10 (Ubuntu)
X-Pingback: https://qa1.wordfence.com/xmlrpc.php
Vary: Accept-Encoding
Content-Encoding: gzip
Content-Length: 3029
Keep-Alive: timeout=5, max=100
Connection: Keep-Alive
Content-Type: text/html; charset=UTF-8

After the response headers there is a carriage return and then a block of encoded text which we will explain below. The encoded text looks like the following image capture when viewed in a terminal. As you can see it is binary that the browser needs to decode.

Let’s discuss each part of the response in detail.

HTTP/1.1 200 OK

This is the heading of the response and it again specifies the protocol being used, in this case HTTP/1.1. It also includes a response code and a status, in this case “200 OK”. You are probably already familiar with another response code: “404 NOT FOUND”. Here are several other common response codes:

- 301 Moved Permanently – This is referred to on the Web as a permanent redirect.

- 302 Found – This is called a temporary redirect and it indicates that a document is temporarily being hosted on another URL.

- 304 Not Modified – If a web browser sends a conditional request, which says “only give me the document if it has changed”, then it will receive this response if the document has not been modified. This is an example of browser caching.

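- 403 Forbidden – The web server understood the request but refuses to fulfil it, usually because you do not have permission to access the resource. (If you fail basic authentication, a specific type of web authentication, the web server returns 401 Unauthorized instead.)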

- 500 Internal Server Error – If the web server application encountered a critical error (it crashed or stopped execution) the server will return this.

- 503 Service Unavailable – You will see this if a website is being overloaded with traffic and can’t handle any more requests.

HTTP response codes all start with a number that indicates their category.

- Codes starting with 1 are informational.

- Codes starting with 2 indicate success.

- Codes starting with 3 indicate ‘redirection’ and that further action needs to be taken by the browser to complete the request.

- Codes starting with 4 indicate client errors caused by the web browser.

- Codes starting with 5 indicate server errors.
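The categories above can be worked out from the first digit of any response code. Here is a minimal Python sketch that does this, using the standard library’s list of status codes to look up the status text. The CATEGORIES table and the describe function are hypothetical names made up for this example.

```python
from http import HTTPStatus

# First digit of the response code -> category, as listed above
CATEGORIES = {
    1: "informational",
    2: "success",
    3: "redirection",
    4: "client error",
    5: "server error",
}


def describe(code):
    """Return the code, its standard status text and its category."""
    category = CATEGORIES[code // 100]
    phrase = HTTPStatus(code).phrase
    return f"{code} {phrase} ({category})"


for code in (200, 301, 304, 404, 503):
    print(describe(code))
```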

Date: Mon, 21 Sep 2015 01:03:47 GMT

The ‘Date’ response header gives the date and time at which the message was generated.
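HTTP dates use the same format as email dates, so if you ever need to work with one in a script, Python’s standard email utilities can parse it. This is a small sketch using the Date value from the response above.

```python
from email.utils import parsedate_to_datetime

date_header = "Mon, 21 Sep 2015 01:03:47 GMT"

# Returns a timezone-aware datetime because the header specifies GMT
generated_at = parsedate_to_datetime(date_header)
print(generated_at.isoformat())
```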

Server: Apache/2.4.10 (Ubuntu)

The ‘Server’ header shows you what web server the website is running. This data is sometimes hidden by a website administrator. They might just include a short server name like ‘Apache’ which does not divulge information that might be useful to hackers. The Netcraft Web Server Survey uses this header to determine web server market share.

X-Pingback: https://qa1.wordfence.com/xmlrpc.php

It used to be the case that non-standard response headers were given an ‘X-’ prefix to indicate that they are non-standard or proprietary. For example, companies like Automattic included some recruiting information as an X- header on their web server.

Then some of these X- headers started becoming standards. For example X-Forwarded-For is now a de facto standard that indicates the real IP address of a client that is behind a proxy server. So non-standard headers becoming standards became a little confusing. In 2012 the IETF published RFC 6648 which suggested that we get rid of X- headers and just call custom headers by a descriptive, helpful and unique name in case they become standards.

Many developers don’t actually realize that X- headers are no longer fashionable or even desirable. So you still see a lot of custom HTTP response headers being created and prefixed with an ‘X’ and a dash.

In the case of the X-Pingback, this is a web server telling you that if you publish an article and link to this website, you should notify the website of the link using the ‘pingback‘ URL of: https://qa1.wordfence.com/xmlrpc.php

Vary: Accept-Encoding

The ‘Vary’ header is used by caching proxies and caches like Varnish. To understand this section on ‘Vary’ you need to understand how caching proxies work.

In this case the ‘Vary’ header tells a caching proxy that if browsers have different values for the ‘Accept-Encoding’ header when they send their request, the proxy needs to cache separate versions for each variation of the Accept-Encoding header.

Let’s take a simple example:

- A web browser sends a request to a proxy server for a web page and includes “Accept-Encoding: gzip”

- The proxy contacts the web server and fetches the page. It stores a copy of (caches) the page and sends the results on to the web browser.

- Another web browser sends a request but does not include the ‘Accept-Encoding’ header. This browser can’t accept compressed pages so it’s not OK for the proxy to just send it the stored version because that version is compressed. So the proxy contacts the web server again, sends a new request with no Accept-Encoding header and stores another uncompressed version of the page and sends the results to the browser.

- At this point the proxy server is storing multiple versions of the page. Thanks to the ‘Vary’ header, it knows that pages fetched using different browser ‘Accept-Encoding’ headers must be treated as separate variations of the page, hence the name of the Vary header.

As you can see, the ‘Vary’ header is used to tell proxy servers that cache on the Internet how to store pages and when to consider a version of a web page unique based on how it was fetched.
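The example above can be sketched as a toy cache in Python. This is only an illustration of the idea and not how Varnish or any real caching proxy is implemented. The VaryCache class, the URL and the page bodies are all hypothetical, and request header names are assumed to be lowercase.

```python
class VaryCache:
    """Toy cache that stores one copy of a page per Vary variation."""

    def __init__(self):
        self.vary_headers = {}  # url -> header names listed in Vary
        self.entries = {}       # (url, variation) -> cached body

    def _key(self, url, request_headers):
        names = self.vary_headers.get(url, [])
        # A missing request header counts as its own variation (empty string)
        variation = tuple(request_headers.get(name, "") for name in names)
        return (url, variation)

    def get(self, url, request_headers):
        """Return the cached body for this variation, or None on a miss."""
        return self.entries.get(self._key(url, request_headers))

    def store(self, url, request_headers, vary, body):
        """Cache a response using the Vary header the web server sent."""
        self.vary_headers[url] = [n.strip().lower() for n in vary.split(",")]
        self.entries[self._key(url, request_headers)] = body


cache = VaryCache()
url = "/example-page"  # hypothetical URL

# First browser accepts gzip, so the proxy stores the compressed version
cache.store(url, {"accept-encoding": "gzip"}, "Accept-Encoding", b"compressed page")
print(cache.get(url, {"accept-encoding": "gzip"}))  # served from the cache

# Second browser sends no Accept-Encoding header, so this is a cache miss
print(cache.get(url, {}))

# The proxy fetches again and stores a second, uncompressed variation
cache.store(url, {}, "Accept-Encoding", b"uncompressed page")
print(len(cache.entries), "versions stored")
```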

Content-Encoding: gzip

The ‘Content-Encoding’ response header simply tells the web browser how the content was encoded. In this case it was gzip compressed and is therefore compressed binary data. This header tells the web browser to uncompress the content before it is displayed.

We’ve included a screenshot above that shows you what the gzipped data actually looks like.

Content-Length: 3029

This header is self explanatory and tells the web browser how long the content is. The browser can use this to determine if all the data has been received yet and whether it should start decompression (if the content is compressed) and displaying the page.

Keep-Alive: timeout=5, max=100

The Keep-Alive response header tells the browser that it can keep a connection it’s not using open for a maximum amount of (in this case) 5 seconds before the server will close it. The ‘max’ parameter tells the browser that it can send a maximum of 100 requests on that connection (with less than 5 seconds between each request) before the connection will be closed.
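As a small illustration, the two parameters can be pulled out of the header value with a few lines of Python. The function name parse_keep_alive is hypothetical, and the sketch assumes every parameter has an integer value as in the example above.

```python
def parse_keep_alive(value):
    """Parse a value like 'timeout=5, max=100' into a dict of integers."""
    params = {}
    for part in value.split(","):
        name, _, number = part.strip().partition("=")
        params[name] = int(number)
    return params


limits = parse_keep_alive("timeout=5, max=100")
print("idle timeout in seconds:", limits["timeout"])
print("maximum requests on this connection:", limits["max"])
```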

Connection: Keep-Alive

As we discussed in the Request section above, this tells the client (web browser) that the server will allow keep-alive and will hold the connection open.

Content-Type: text/html; charset=UTF-8

The ‘Content-Type’ header tells the web browser what kind of content it is receiving. In this case the server is about to send text/html (which is plain old HTML).

This header includes a ‘charset’ parameter that tells the web browser that the character set used is UTF-8.
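To show how the two parts of this header are used, here is a minimal Python sketch that separates the media type from the charset using the standard library’s email message class, which understands this header format. The body text in the sketch is made up for the example.

```python
from email.message import Message

msg = Message()
msg["Content-Type"] = "text/html; charset=UTF-8"

media_type = msg.get_content_type()   # the kind of content
charset = msg.get_content_charset()   # the character set, lowercased
print(media_type, charset)

# The charset tells the browser how to turn the body bytes into text.
# Hypothetical body: the word "cafe" with an accented final letter.
body_bytes = "caf\u00e9".encode("utf-8")
text = body_bytes.decode(charset)
print(len(body_bytes), "bytes decode to", len(text), "characters")
```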

The Content and New-Lines that Follow the Headers

The HTTP response does of course include content. In this case it is compressed and as you can see from the screenshot above, the compressed data is binary and when viewed compressed in a text viewer it looks like garbage code.

The headers themselves are followed by new lines. These new lines are actually carriage returns and line feed characters at the end of each line. If a browser receives a list of headers and then two new lines, it knows the rest of the response is the content body.
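Here is a minimal Python sketch of how a client could use that rule to separate the headers from the content body and then decompress the body. It is a simplification of what a real browser does. The parse_response function is a hypothetical name, and the response it parses is a small made-up one rather than the real capture shown above.

```python
import gzip


def parse_response(raw):
    """Split raw HTTP response bytes into status line, headers and body."""
    # The headers end at the first blank line: CR LF followed by CR LF
    head, _, body = raw.partition(b"\r\n\r\n")
    lines = head.decode("iso-8859-1").split("\r\n")
    status_line = lines[0]
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    # Content-Encoding tells us whether the body must be uncompressed
    if headers.get("content-encoding") == "gzip":
        body = gzip.decompress(body)
    return status_line, headers, body


# Build a small hypothetical response to parse
page = gzip.compress(b"<html><body>Hello</body></html>")
raw = (
    b"HTTP/1.1 200 OK\r\n"
    b"Content-Encoding: gzip\r\n"
    b"Content-Length: " + str(len(page)).encode("ascii") + b"\r\n"
    b"Content-Type: text/html; charset=UTF-8\r\n"
    b"\r\n"
) + page

status_line, headers, body = parse_response(raw)
print(status_line)
print(headers["content-type"])
print(body.decode("utf-8"))
```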

Bringing it All Together: A Packet’s Journey Through the OSI Layers and Across the Internet

We have discussed networking starting with an introduction to the OSI model and we then travelled from the physical layer on the bottom of the OSI model all the way to the application layer at the top.

At this point you should have a visual in your mind of how a packet travels from an application, down the OSI model, across the Internet and back up the other side. To help you fully understand the flow through the OSI model, we have included a short video that illustrates a packet’s journey across the Internet.

Conclusion

We hope that this article has given you a clear understanding of the OSI model and a foundation in networking on the Internet. There are many other protocols that exist at each layer of the OSI model and we have focused on a few specific protocols to help you understand networking on the Web. For further reading, we encourage you to learn more about ICMP at layer 3 (how errors are sent), UDP at layer 4 (for connectionless data transfer) and the many application protocols that exist at layer 7 like SMTP for email and FTP for file transfer.